Author: tlewis

Wednesday, May 8, 2013 – Cabling and Labeling

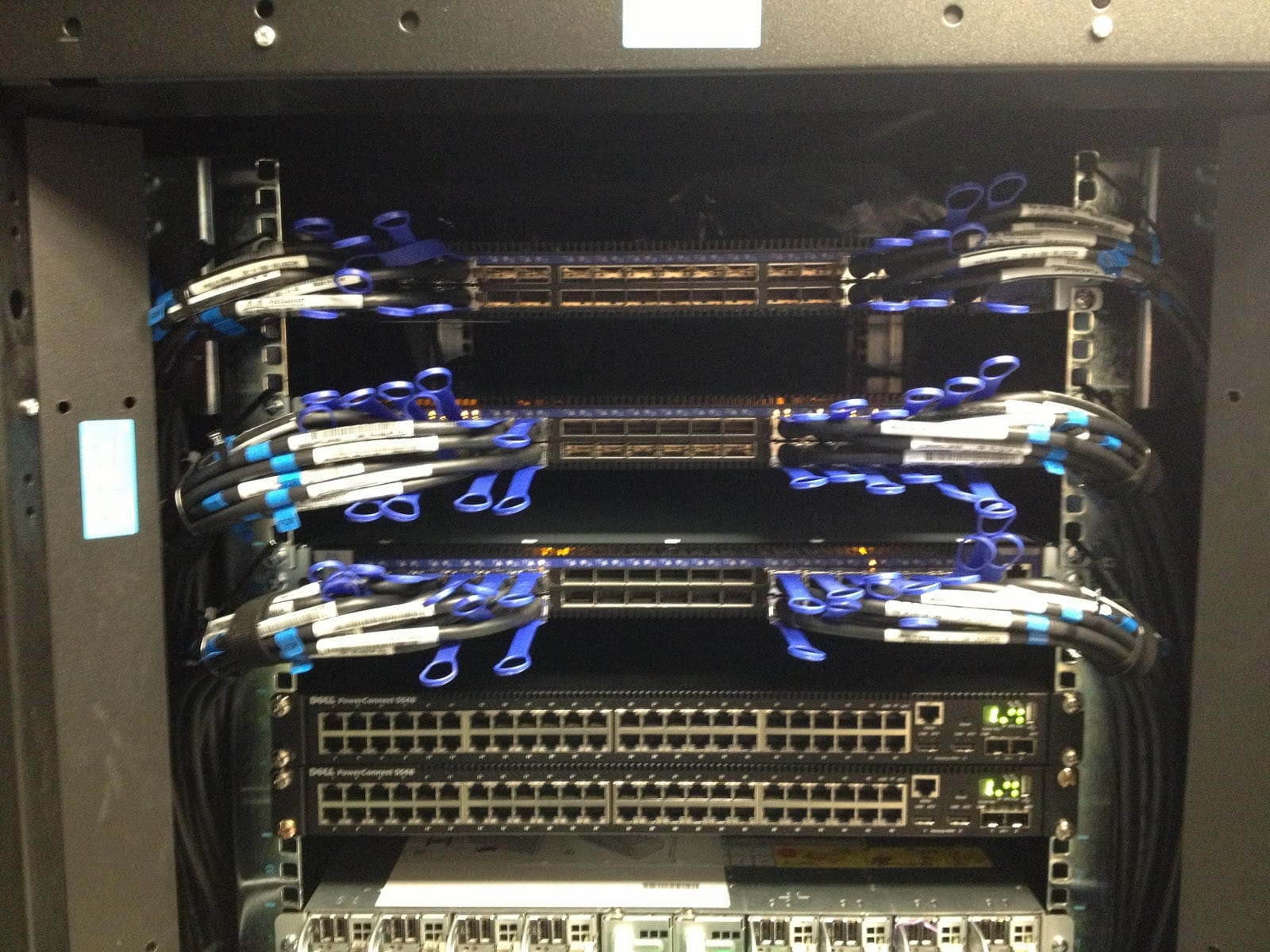

With all of the hardware physically installed, the team turned its focus to installing the Ethernet and InfiniBand cables for each cluster node. Each node is connected to a 1 Gbps Ethernet management network for administrative functions such as operating system deployment, application deployment, and certain management commands. The primary communication path for each node is the InfiniBand interconnect network: each node has one 56 Gbps FDR InfiniBand connection, used for inter-node communication and for input/output to the storage systems.

The project team focused on finding the most effective cable management method to ensure proper airflow to the front of the Dell C8000 compute nodes.

By the end of the day, cabling for all 96 compute nodes was complete!

Tomorrow is the last day scheduled for physical hardware deployment. There is still plenty to do to ensure the cluster is ready for its baseline configuration next Monday!

Tuesday, May 7, 2013 – Hardware Installation and Power-On Tests

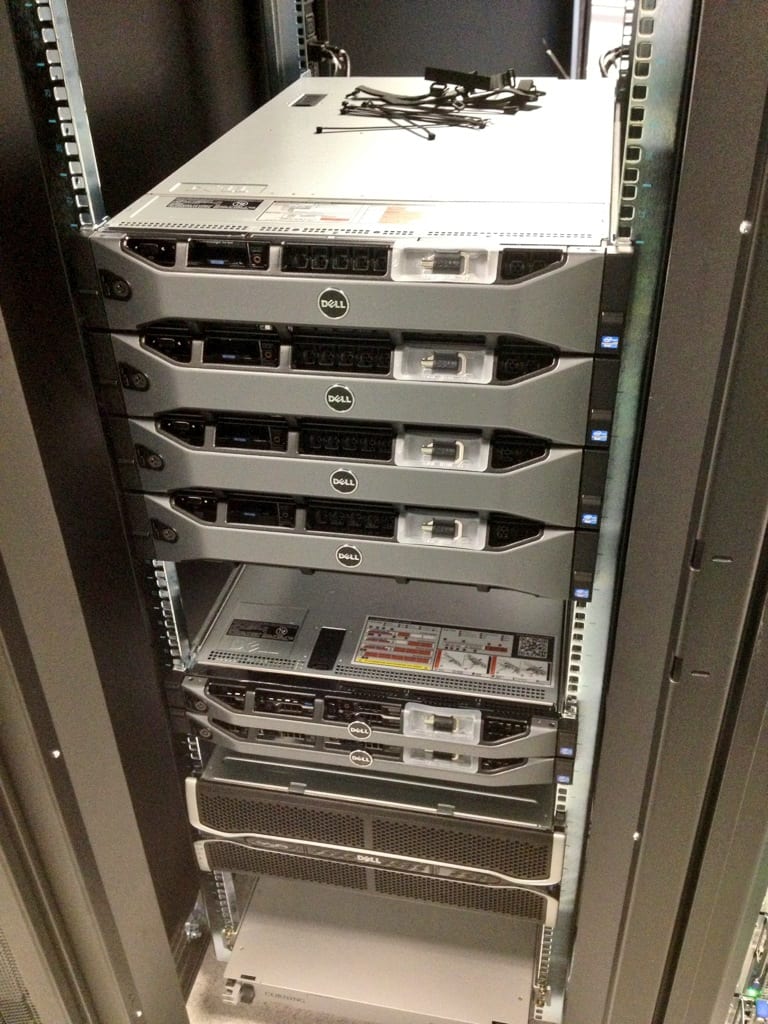

Today, physical installation of the server hardware continued. With all 16 blade enclosures and 96 compute nodes installed yesterday, the team focused on installing the cluster's 4 head nodes, the Dell NSS primary storage system, and the Dell Terascala HSS Lustre scratch storage system.

The cluster's head nodes will primarily handle user logins, cluster management, resource scheduling, data transfers in and out, and interconnect network fabric management. Each head node is equipped with dual Intel Xeon E5-2670 2.6 GHz 8-core processors, 64 GB of RAM, one non-blocking 56 Gbps FDR InfiniBand connection to the cluster's internal interconnect network, and two 10 Gbps fiber uplinks to the campus network core. Combined, the head nodes will have 80 Gbps of throughput to the GW network core (4 head nodes × 2 uplinks × 10 Gbps each)! With this level of throughput into the core, researchers will be well positioned to take advantage of GW's robust connectivity to the public Internet, the Internet2 research institution network, and the 100 Gbps inter-campus link planned for later this year.

While the storage systems are being physically installed, the Dell COSIP team verifies each of the 96 compute nodes by powering on and testing each node individually.

The cluster's primary storage system, Dell's NSS NFS storage solution, resides in Rack #4 and features non-blocking connectivity to the cluster's high-speed FDR InfiniBand interconnect network and approximately 144 TB of usable capacity. There's plenty of room to expand the storage system: each additional 4U storage chassis adds 144 TB of usable capacity in a simple and highly cost-effective manner.

After completing the storage system installation in Rack #4, the installers moved on to the Dell Terascala HSS Lustre scratch storage system. The HSS solution will provide a high-speed parallel file system to support multi-node jobs efficiently. In its current configuration, the solution supports up to 6.2 GB/s of read performance and 4.2 GB/s of write performance. Both storage capacity and performance can be expanded relatively easily by adding storage enclosures and additional object storage server (OSS) pairs.

With all of the major hardware installed, the Dell team turns to installing, labeling, and managing cables. With 192 InfiniBand and Ethernet cables coming from the 96 compute nodes (one of each per node), this is no small task. Because the compute nodes produce a large amount of heat, airflow is essential, and careful cable management keeps the cables from obstructing airflow to each cluster component.

Day two of the installation wrapped up around 6:30 PM. Great progress has been made in the physical deployment. Tomorrow the team will focus on running cables for the management and interconnect networks.

Video Update: May 7, 2013 Time-lapse All Cameras

Video Update: May 6, 2013 Time-lapse All Cameras

Video Update: May 6, 2013 Staging Area Time-lapse

Video Update: May 6, 2013 Front Cabinet Time-lapse

Video Update: May 6, 2013 Rear Cabinet Time-lapse

Monday, May 6, 2013 – Hardware Rack and Stack

On Monday morning, the GW and Dell project teams began the physical deployment of the Colonial One cluster hardware. In addition, Dell dispatched a team of four from its COSIP (customer on-site inspection) group to monitor and maintain the quality of the installation.

The team prepared the server cabinets by adjusting rack rails and power distribution units (PDUs) before installing the blade enclosures themselves. Because of the number of cables required for each node, careful attention was paid to the spacing of the rack rails, ensuring enough space at the front of the blade enclosures for cables and enough space at the rear for proper airflow.

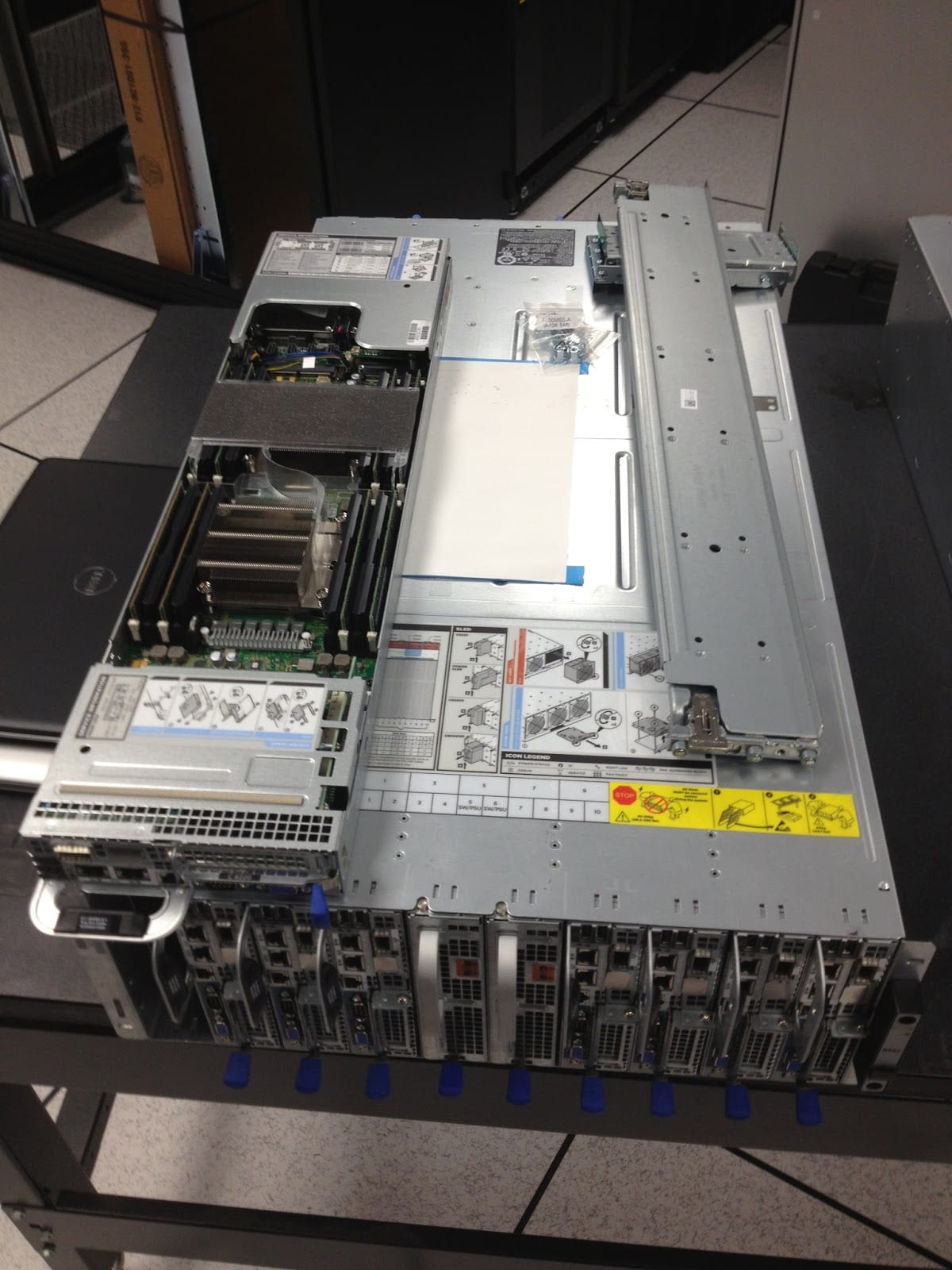

While half of the team prepared the server cabinets, the other half began unpacking and prepping the blade enclosures and blade servers.

Each Dell C8220 blade server required an on-site BMC cable installation to move the Ethernet port from the rear to the front, so every blade had to be opened and modified. The care and attention the Dell team paid to this process makes the installation look like a work of art.

With the cabinets prepped, the blade server enclosures are loaded in one by one: 8 in each cabinet!

Day one is complete!

GW Marketing and Creative Services Installs HPC Cabinet “Skins”

On Wednesday, May 1, 2013, the Colonial One project kicked off with some spiffy cabinet skins designed by the GW Marketing and Creative Services team. With the custom science-themed graphics, the Colonial One server cabinets now look as cool as the technology inside them! Check out the photos below and the time-lapse video available here.