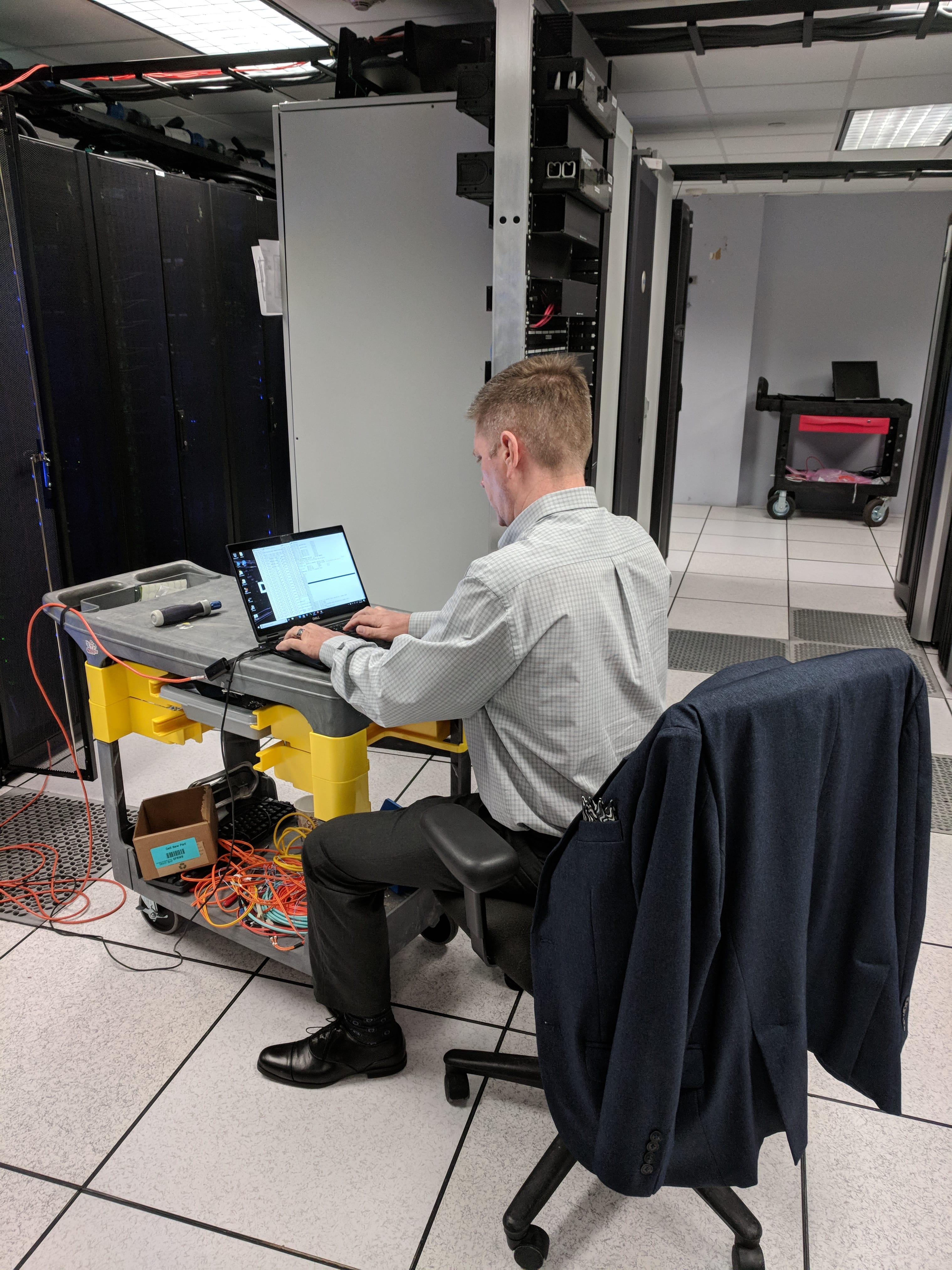

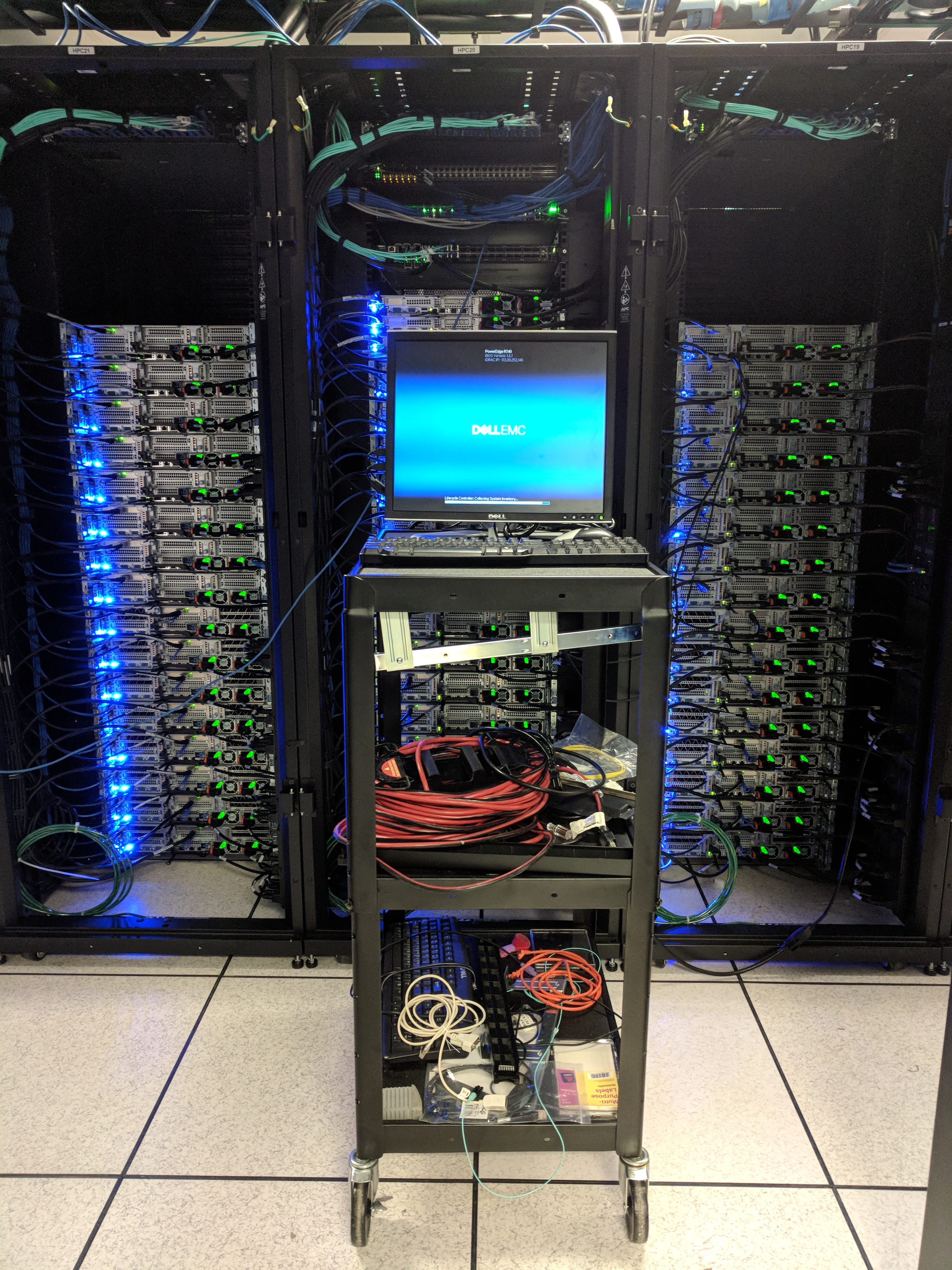

Today marks the last day the Dell and DDN teams will be on site, which means the end of deployment.

After a long day, the team was able to complete:

- Lustre configuration

- Verified that both GPFS and Lustre are good to go.

- Had the motherboard replaced on an additional node. Updated firmware and reapplied BIOS settings after the replacement was complete.

- Completed final rack pair Linpack tests.

- Connected entire cluster to GPFS and Lustre.