Today is the launch event for the Colonial One cluster! We're very excited to have Ali Eskandarian, Dean of the College of Professional Studies and theoretical physicist, host this event featuring comments from Vice President Leo Chalupa, Chief Information Officer Dave Steinour, Dr. Diana Lipscomb, and Dr. Keith Crandall.

Today's event will focus on GW's new approach to high performance research computing and feature current research in the Computational Biology Institute and the arts and sciences. Dr. Lipscomb and Dr. Crandall will discuss how Colonial One will play an integral role in supporting and expanding research at GW.

At the conclusion of the program, event attendees will have the opportunity to participate in a guided tour of the Virginia Science and Technology Campus Data Center to see the cluster and the infrastructure deployed to support it. Additionally, several faculty, students, and staff will showcase research posters demonstrating research enabled by high performance computing resources.

Event Details

Location: Enterprise Hall Executive Dining Room (2nd Floor) at the Virginia Science and Technology Campus

Time: 3PM - 5PM

Cluster Update

The initial configuration and physical deployment of the cluster were completed on May 17, 2013. When deploying complex computing clusters such as Colonial One, the baseline configuration is only where the work begins. Over the past month, the project team has worked diligently with faculty in the physics and chemistry departments to benchmark, optimize, and thoroughly test the cluster. These two disciplines are important test cases because their jobs are often highly "parallelizable" and can scale to a large number of nodes. Testing the cluster with these types of jobs helps to identify configuration issues and I/O bottlenecks. The issues and optimization opportunities identified by working with these users will benefit all users later this year when the cluster moves into full production.

To date, we have worked with Andrei Alexandru's Physics Lattice QCD group, Hanning Chen's Theoretical and Computational Chemistry group, and Houston Miller's research group.

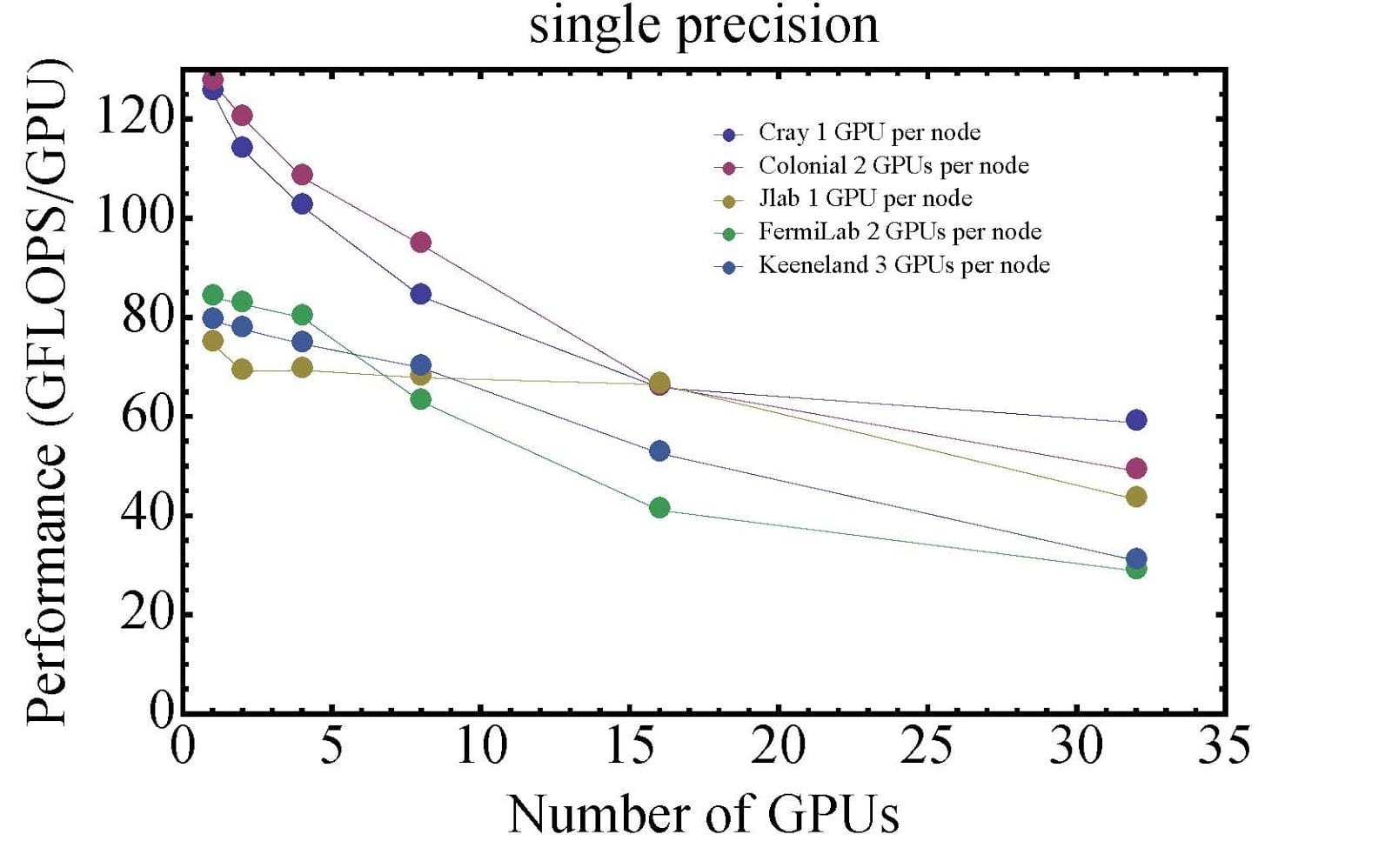

Lattice QCD Cluster Performance Comparison

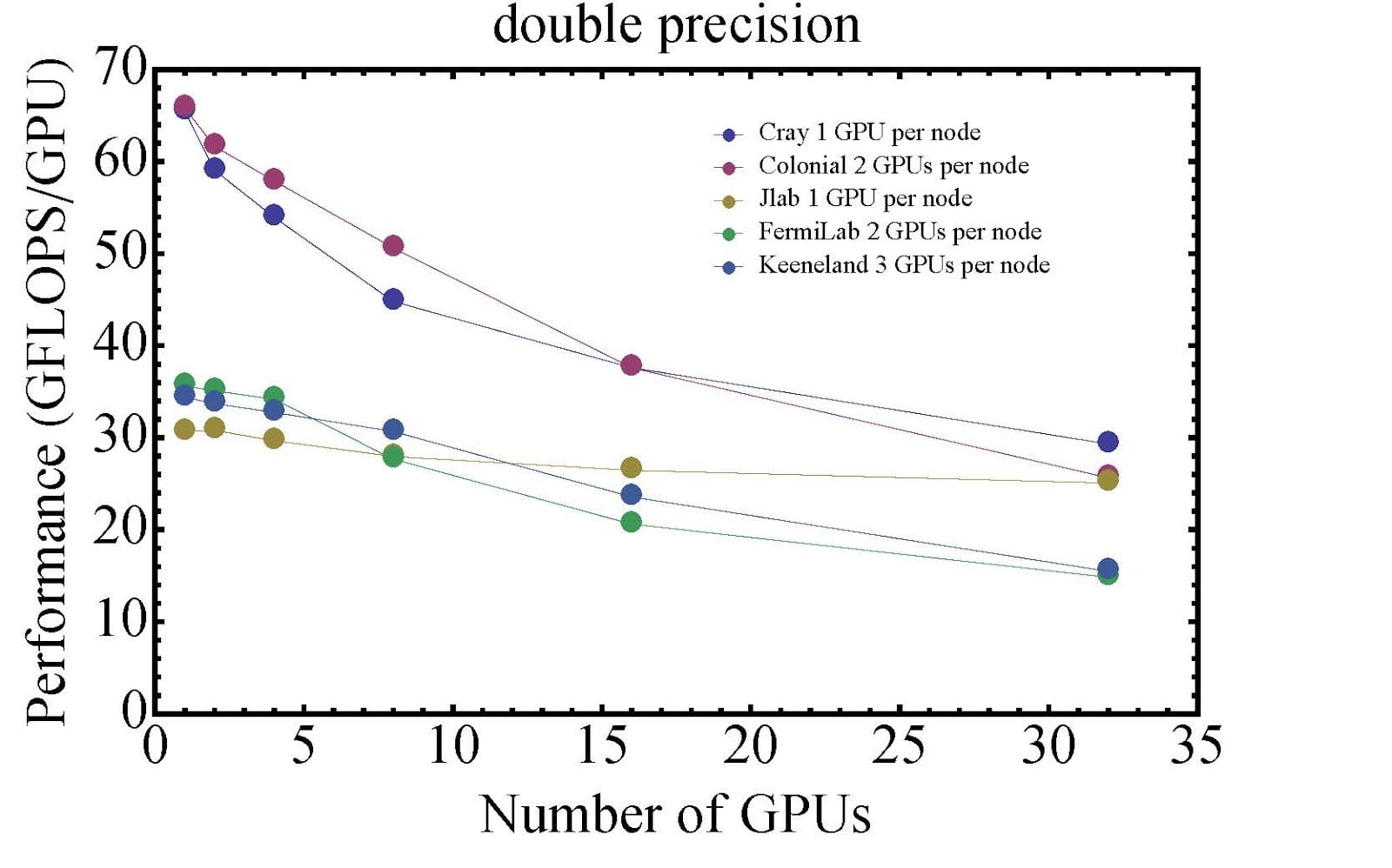

Mike Lujan, a graduate student working in the Lattice QCD group, spent some time comparing code performance on Colonial One's NVIDIA GPU-enabled nodes with several other clusters. The Lattice QCD group's code is designed for multi-GPU and multi-CPU calculations. Because node-to-node communication adds overhead, performance degrades as a job is scaled across more nodes. This is one of the reasons the GPU nodes installed in Colonial One feature dual NVIDIA Kepler K20 GPUs, doubling the density of GPUs per node.
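To make the density argument concrete, here is a minimal sketch of the arithmetic: for a fixed number of GPUs, dual-GPU nodes need about half as many nodes, so less of the job's traffic has to cross node boundaries. The function name `nodes_needed` and the GPU counts are illustrative assumptions, not figures from Mike's benchmarks.

```python
def nodes_needed(total_gpus: int, gpus_per_node: int) -> int:
    """Smallest number of nodes that can supply total_gpus GPUs.

    Hypothetical helper for illustration only; it is not part of the
    Lattice QCD group's code or of the cluster's scheduler.
    """
    if total_gpus < 0 or gpus_per_node < 1:
        raise ValueError("total_gpus must be >= 0 and gpus_per_node >= 1")
    # Integer ceiling division: a partly filled node still counts as a node.
    return (total_gpus + gpus_per_node - 1) // gpus_per_node


# Illustrative GPU counts (assumed, not measured values).
for total in (2, 8, 15, 16):
    single = nodes_needed(total, 1)  # one GPU per node
    dual = nodes_needed(total, 2)    # two GPUs per node, as on Colonial One
    print(f"{total} GPUs: {single} single-GPU nodes vs {dual} dual-GPU nodes")
```

This only counts nodes. It does not model communication cost, which depends on the interconnect and on the application.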

Here are the results of Mike's tests running single and double precision jobs:

As you can see, Colonial One ranks #1 in performance until the number of GPUs reaches 15. The Cray cluster noted is part of GW IMPACT, also known as "George." The performance gap between the top two performers and the bottom three is related to different GPU models. Both George and Colonial One are equipped with the latest GPUs from NVIDIA (K20) featuring the new Kepler architecture, while the other three clusters are equipped with previous-generation NVIDIA Tesla GPUs featuring the older Fermi architecture. These comparisons are based entirely on the code used by the Lattice QCD group, and performance could be higher or lower for different applications.

Special thanks to Mike for taking the time to put these performance comparisons together!